With 2019 coming to a close, we took some time to interview Edgybees’ CTO about kicking off 2020.

Question 1: How has Edgybees delivered on its ambitious AR promise and what are some 2020 goals in this area?

Answer: We’ve gotten a lot of mileage, even though we’ve only scratched the surface. We have already achieved accuracy levels down to one meter, which was the goal we set for ourselves when we set off, but as the saying goes, ‘with the food comes the appetite,’ and now we want to drive it even further. We want to be more robust and achieve this even under poor conditions in terms of lighting, obstructions, scenery, or changes relative to the reference material. One KPI that we feel we are in a good place with is latency, where we already achieve something between 100 and 120 milliseconds. For everything else, we’ve done the first 80 percent and now we need to do the next 80 percent.

As for 2020 goals, as I already said, we want to reduce the dependence on reference imagery. We’ve already started developing the algorithms that will allow us to be self-sufficient and to function even if we arrive at a new location where we have limited or outdated reference imagery, or none at all. We want to strengthen the machine learning side of the house so that we can understand what we are looking at, specifically structures, buildings, trees, and so on, and use these to achieve better accuracy and better alignment of the augmentation with the scene. We want to understand 3D scenery better, so that if somebody is on the third floor of a building we’ll know that, and if a building is 20 meters tall we’ll understand that too. We want to extend to new types of source material: currently most of our work is on electro-optical imagery, both visual and infrared, and we want to extend into radar, specifically synthetic aperture radar (SAR). We also want to extend our offering beyond the defense market to industrial and commercial applications, in particular agriculture, infrastructure, oil & gas, mining, and potentially also broadcasting and sports.

Question 2: You mentioned integrating SAR technology with Edgybees. How does that element come into play here?

Answer: SAR images, for lack of a better word, are weird. They don’t look like anything else, so currently, in order to understand a SAR image, a person has to be very highly trained and specialized, which limits the dissemination and value of SAR images to a very small audience. We at Edgybees, however, make SAR images easy to understand even for the average Joe, so now those images have value to a much wider range of users. Couple this with SAR’s unique ability to see through clouds and other obstructions and to pick out objects on the ground even when there is no visibility to them. Now that we can explain to users what it is that they are seeing, you get a very unique offering.

Question: Could you give an example of a real use case where this could help out?

Answer: I’ll give you two examples. Let’s say there is a very large-scale fire that has caused structural damage to buildings. Some buildings have already collapsed, but you cannot see this because the area is covered in smoke. SAR sees through smoke, so the user can see which buildings are still standing and which have already toppled. However, due to SAR’s weirdness, when you see the image you cannot really understand what is going on. If we give you a blueprint of the buildings overlaid on the SAR image, you can immediately see where it aligns with the walls and where it doesn’t, so you get an understanding of what’s going on with each building.

Another example is for military applications, where you have a camouflage net that is blocking light and there is some equipment underneath it. A commander who wants to communicate with his platoon and assign one team to a certain target and another team to a different one would need to explain it to them over the radio in a very complicated and hard-to-understand way. If each of the targets has a designation code and the teams have this picture in their hands, it’s very easy to understand: now I need to take target number 4 or 5 or whatever.

Question 3: What is georegistration and how do you use it for your ends?

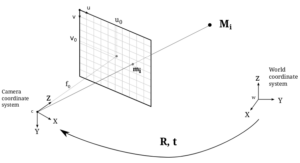

Answer: Say you get a video feed, or let’s say a single video frame, that we want to add rectified GIS information into. In simple words, I want to put things that have a location in the world into the image accurately. The image usually comes from the aircraft with some position information, and this position information describes the location of the aircraft. It is important to note that this does not describe the position of the thing being filmed but of the aircraft itself: the GPS location of the aircraft and the orientation of the camera. All this positioning data is highly inaccurate. GPS is inaccurate; we’re talking about plus or minus 6 meters horizontally in optimal conditions and up to 40 meters vertically. Many people are not aware of it, but GPS is extremely inaccurate vertically. The orientation of the camera could have an error of up to 10 degrees and the pitch sensor could have an error of up to 3-4 degrees. If you combine all these, the resulting error could be tens of meters on the image plane, which of course means that if I try to highlight a specific location, let’s say a junction of two roads, I could be highlighting the next junction over. The errors are very big, so in order to have valuable rectification of information over the video we need a much better understanding of where the image really is. We do this by aligning the image to reference images. This process is called georegistration, and it is an incredibly valuable tool that allows us to perform some of our most important functions at Edgybees.
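To get a feel for how those sensor errors add up on the ground, here is a rough back-of-the-envelope sketch in Python. It is not Edgybees’ method, only simple flat-ground trigonometry. The error figures (6 m horizontal, 40 m vertical, 10 degrees of heading, 3.5 degrees of pitch) come from the answer above; the 100 m altitude, the 45 degree camera depression angle, and the function name `ground_error_contributions` are assumptions made purely for illustration.

```python
import math


def ground_error_contributions(altitude_m, depression_deg, gps_horizontal_m,
                               gps_vertical_m, heading_err_deg, pitch_err_deg):
    """Estimate how far each sensor error moves the camera's aim point.

    Hypothetical helper: assumes flat ground and a camera looking down at
    `depression_deg` below the horizon from `altitude_m` above the ground.
    """
    if not 0.0 < pitch_err_deg < depression_deg < 90.0:
        raise ValueError("need 0 < pitch_err_deg < depression_deg < 90")

    theta = math.radians(depression_deg)
    # Horizontal distance from the point below the aircraft to the aim point.
    ground_range = altitude_m / math.tan(theta)

    return {
        # A horizontal GPS error shifts the aim point by the same amount.
        "gps_horizontal": gps_horizontal_m,
        # An altitude error stretches or shrinks the ground range.
        "gps_vertical": gps_vertical_m / math.tan(theta),
        # A heading error swings the aim point along an arc; use the chord.
        "heading": 2.0 * ground_range * math.sin(math.radians(heading_err_deg) / 2.0),
        # A pitch error (camera looking shallower) pushes the aim point away.
        "pitch": altitude_m / math.tan(theta - math.radians(pitch_err_deg)) - ground_range,
    }


# Example values: errors from the interview, geometry assumed for illustration.
example = ground_error_contributions(
    altitude_m=100.0, depression_deg=45.0,
    gps_horizontal_m=6.0, gps_vertical_m=40.0,
    heading_err_deg=10.0, pitch_err_deg=3.5,
)
for source, metres in example.items():
    print(f"{source}: about {metres:.1f} m")
```

Even in this modest geometry every contribution is in the range of several meters to tens of meters, and the angular terms grow with the distance to the scene, which is consistent with the point that raw telemetry alone can land an overlay on the wrong junction.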

Question 4: What are your thoughts on the competitive landscape from a technical perspective?

Answer: There are other companies and government institutions offering some of the same functions that we offer, but we don’t see anyone using the same technologies to deliver them. In simple words, we see them use hardware to add augmentation to the video; we don’t today see anybody using video processing to add visual indicators to the video. In this respect we are unique, because we are agnostic to the video coming in. SAR is a good example of this. We do not require any modification to the payload, the camera, or the aircraft, and we can work with video coming in from very lightweight platforms where you simply cannot add instrumentation to the hardware. We are not aware of any direct competition, but we are keeping our eyes open because something will turn up sooner or later.